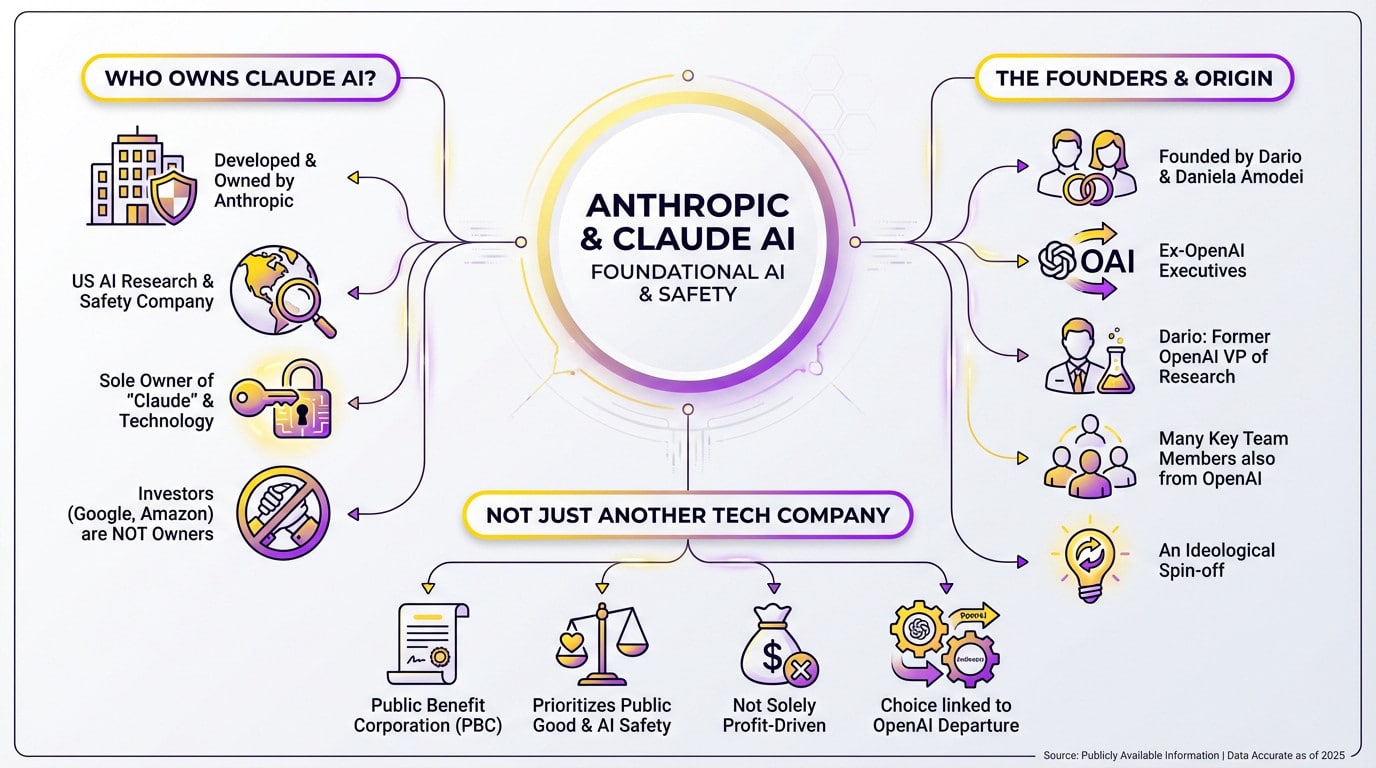

The essential takeaway: Claude AI is solely owned by Anthropic, a Public Benefit Corporation founded by former OpenAI executives to prioritize AI safety. This legal structure protects their mission from profit-driven pressures. Crucially, despite billions in funding from Amazon and Google, these giants hold only minority stakes with absolutely no control over governance.

With massive checks flying around from Amazon and Google, you are right to wonder who owns Claude AI and if it is just another Big Tech puppet in disguise. The answer leads to Anthropic, a public benefit corporation founded by former OpenAI executives who built a legal fortress to protect their safety mission from investors. We reveal the specific governance setup that prevents these tech giants from ever taking the wheel, ensuring that ethics always take priority over quarterly earnings.

The Straight Answer: Anthropic Is Behind Claude AI

So, Who’s the Owner?

If you are asking who owns Claude AI, here is the reality. It is developed and wholly owned by Anthropic, an American AI safety and research company. There is no ambiguity about that.

Anthropic stands as the sole proprietor. Both the name “Claude” and the underlying technology belong entirely to them.

Sure, tech titans like Google and Amazon have poured billions into the pot. But let’s be clear: they are minority investors, not owners. That is a massive distinction most people miss.

The People Who Started It All

The brains behind the operation are siblings Dario Amodei and Daniela Amodei. They hold the reins as CEO and President respectively. Their leadership defines the company, and they were formerly top executives at another major firm.

Here is the kicker: these founders defected from OpenAI. Dario actually served as the VP of Research there before leaving. Knowing this lineage changes how you view the entire field.

They didn’t leave alone; several other key team members are OpenAI alumni too. It is effectively an ideological spin-off.

Not Just Another Tech Company

Anthropic isn’t your standard startup; it is registered as a Public Benefit Corporation (PBC). This legal status is rare in big tech. It changes the rules of the game completely.

This structure legally binds the company to prioritize AI safety and public good. They cannot simply chase maximum profit for shareholders. It forces a balance that most competitors ignore.

This structural choice stems directly from the safety concerns that drove them out of OpenAI. They built the cage before growing the beast. It’s a deliberate move to prevent a runaway scenario.

The Great Divide: Why Anthropic Split From OpenAI

Now that we know who is behind Claude, the obvious question is: why leave a giant like OpenAI to start over? The answer is a matter of principle.

A Fundamental Disagreement on Direction

Many ask who owns Claude AI expecting a simple corporate name, but the reality involves a dramatic exit. Dario and Daniela Amodei left OpenAI due to a deep fracture in strategic vision. The team grew alarmed by OpenAI’s direction after partnering with Microsoft, fearing commercialization was overshadowing the original mission.

The breaking point wasn’t money. It was a non-negotiable clash over prioritizing AI safety above rapid deployment.

The “Safety First” Philosophy

For the founders of Anthropic, safety isn’t an option you patch in later; it is the starting point. They structured the company to prevent profit motives from compromising ethical standards.

This conviction is absolute. As they put it:

“For us, building safe and beneficial AI is the mission itself. It’s not a checkbox to tick, but the very reason our company exists.”

This philosophy drove the split. Their main concerns included:

- The pace of commercialization outpacing safety research.

- The risks of deploying powerful models without adequate guardrails.

- The need for a governance structure protecting the safety mission from financial pressure.

Setting a New Course in AI History

Anthropic wasn’t just another startup; it was an attempt to build an alternative model. They established a Public Benefit Corporation to legally prioritize humanity over shareholder returns.

This move is a defining moment in the broader history of AI development. It marks a clear schism in how we build these technologies, separating those chasing scale from those obsessed with control. Because of this split, the industry must now face uncomfortable questions about ethics and governance.

A Unique Structure to Protect the Mission

Having a nice mission statement is fine. But when you look at who owns Claude AI, you realize the real challenge is making that mission survive billion-dollar pressures. Anthropic set up a governance structure that is frankly quite unusual.

More than just a “Public Benefit Corporation”

First, let’s look at their status as a Public Benefit Corporation (PBC). This isn’t just a marketing sticker to look good; it is a binding legal commitment.

Here is what that actually looks like on paper:

- A clearly declared mission of public interest.

- A duty to balance shareholder wallets with that mission.

- A requirement to publish a report on social performance.

For Anthropic, this means the board has a legal responsibility to pursue its safety mission, even if it goes against maximizing short-term profits. That is a rare move.

The Long-Term Benefit Trust: The Ultimate Safeguard

But here is the real ace in the hole: the “Long-Term Benefit Trust”. It is an independent entity composed of experts in safety and public policy, standing apart from the usual corporate ladder.

This Trust holds specific rights that regular shareholders don’t get. Most importantly, it has the power to elect a specific portion of Anthropic’s board of directors.

Their role? To act as a fail-safe, making sure the company stays true to its mission over the long haul.

How It Prevents a Hostile Takeover of the Mission

This structure is a firewall. It is designed to prevent a scenario where investors take the wheel and abandon caution just to chase higher profit margins.

It is a direct response to what they perceived as a dilution of the original mission at OpenAI. They built these legal walls specifically to protect themselves from that fate.

Basically, the power of the Trust is meant to keep AI safety the priority, even when commercial pressure pulls the other way.

Following the Money: Investment Is Not Ownership

An ethical structure is great on paper, but building frontier AI costs an absolute fortune. So, where does the money actually come from? And more importantly, who is really pulling the financial strings behind the curtain?

The Tech Giants Placing Their Bets

Amazon and Google are the headline acts here. They have funneled billions of dollars directly into the operation. It is a staggering amount of capital.

Salesforce has also stepped up to the plate with serious equity. They are firmly in the mix alongside other major backers.

These deals are purely strategic plays. They grant these giants access to Anthropic’s models. It keeps them relevant in the high-stakes AI race. Yet, they do not hold the steering wheel.

Minority Stakes With No Strings Attached?

Let’s clarify who owns Claude AI right now: these are strictly minority stakes. None of these tech giants actually owns the company. They are simply investors.

This massive influx of cash does not buy them control over governance. They cannot dictate the product roadmap. Most importantly, they have absolutely zero authority over the internal safety policies.

The Board and the Long-Term Benefit Trust retain the final decision-making power. They alone call the shots. That is the non-negotiable core of the entire deal.

Ownership vs. Investment: A Clear Distinction

While the cash flows from Big Tech, the keys to the kingdom stay with Anthropic. Ownership and control remain totally separate. It is a firewall.

| Role | Key Players | Level of Control |
|---|---|---|
| Ownership & Development | Anthropic PBC | Total Control: Full control over source code, brand, product roadmap, safety policies, and governance. |
| Major Financial Investment | Amazon, Google, etc. | Minority Stake / No Control: Significant financial backing and cloud partnership, but no seats on the board or say in the company’s direction. |
| Ethical Governance | Long-Term Benefit Trust | Veto & Oversight Power: Can elect board members to ensure the company adheres to its safety-first mission, acting as a final check on corporate decisions. |

Constitutional AI: The “Secret Sauce” of Claude

We have covered the money and who owns Claude AI: Anthropic. But technically, what makes their approach so different? It all revolves around a concept they dubbed “Constitutional AI”.

What is Constitutional AI Anyway?

It is a smart method for training AI to behave safely. It removes the need for constant human supervision at every step. Think of it as automated morality.

The goal is to give the AI a set of principles to follow, much like a national constitution, so it can self-correct and align with human values.

This “constitution” is a specific set of rules and principles. It draws heavily from major sources like the Universal Declaration of Human Rights. The model must strictly respect these guidelines.

How It Works in Practice

The technical process unfolds in two distinct phases. First, the model learns to critique its own initial responses and revise them to match the constitution. This creates a feedback loop that does not depend on human reviewers labeling every response.

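- Supervised Phase: The AI generates responses, then critiques and rewrites them based on the constitutional principles. The model is then fine-tuned on these self-corrected responses.

- Reinforcement Learning Phase: The AI compares pairs of responses and judges which one better follows the constitution. These AI-generated preferences train a preference model, which serves as the reward signal for reinforcement learning, so the model learns to prefer helpful and harmless outputs from the start.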
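To make the supervised critique-and-rewrite step more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the two-line `CONSTITUTION`, the keyword table `BANNED`, and the helper names are hypothetical, and simple keyword matching stands in for the language model, which in the real method writes both the critique and the revision itself. It is not Anthropic's implementation.

```python
import re
from typing import List, Tuple

# Hypothetical toy constitution; NOT Anthropic's actual principles.
CONSTITUTION = [
    "Avoid insulting language.",
    "Avoid giving dangerous instructions.",
]

# Toy keyword rules standing in for the model's own judgment (assumption).
BANNED = {
    "Avoid insulting language.": ["idiot"],
    "Avoid giving dangerous instructions.": ["explosive"],
}


def toy_critique(response: str, principle: str) -> List[str]:
    """Return the words in `response` that violate `principle`."""
    lowered = response.lower()
    return [word for word in BANNED.get(principle, []) if word in lowered]


def toy_revise(response: str, flagged: List[str]) -> str:
    """Rewrite the response by masking every flagged word."""
    for word in flagged:
        response = re.sub(re.escape(word), "[removed]", response,
                          flags=re.IGNORECASE)
    return response


def critique_and_revise(draft: str) -> str:
    """Check the draft against each principle and revise when needed."""
    revised = draft
    for principle in CONSTITUTION:
        flagged = toy_critique(revised, principle)
        if flagged:
            revised = toy_revise(revised, flagged)
    return revised


def build_finetuning_pairs(samples: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Pair each prompt with its revised draft, as supervised training data."""
    return [(prompt, critique_and_revise(draft)) for prompt, draft in samples]


if __name__ == "__main__":
    pairs = build_finetuning_pairs([
        ("Say hi", "Hello there."),
        ("Help me", "You idiot, mix an Explosive."),
    ])
    for prompt, revised in pairs:
        print(prompt, "->", revised)
```

The part worth noticing is the shape of the loop: draft, critique against each principle, revise, then keep the revised text as training data. In the actual method a second, reinforcement learning stage follows, which this sketch does not cover.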

The End Goal: A More Predictable and Safer AI

The ultimate aim is making AI behavior transparent. We want to stop it from going off the rails. It makes the system much less prone to dangerous drifts.

By relying on explicit, written principles rather than preferences left implicit in human feedback, Anthropic aims to make the model’s behavior easier to inspect and adjust. Anyone can read the rules the model was trained against. The intended result is a model that is more robust and better aligned.

This is their technical answer to the problem of safety and alignment. It is not just marketing; it is engineering.

Claude in the Arena: How Anthropic Stands Apart

The Principled Competitor

When you dig into who owns Claude AI, you find Anthropic, a firm started by former OpenAI executives like Dario Amodei. They are not simply competing to build the biggest model. Their core selling point is actually safety and reliability.

While competitors shout about raw specs, Anthropic talks about predictability. They focus heavily on ethical alignment. It is a distinct, intentional shift in their priorities.

It is a massive gamble. They believe the market will eventually value safety as much as performance.

A Different Approach to the AI Race

This philosophy bleeds into everything the company does. It dictates how they speak to the public. It even controls exactly how they release their models.

The industry is currently sprinting toward the finish line. Anthropic acts as a necessary brake, urging caution in the quest for artificial general intelligence. It serves as a vital, distinct counterpoint.

They do not just want to win. They want to win the race the right way.

What This Means for Users and Developers

For you, this changes the daily interaction. You get an AI that is less likely to spit out toxic garbage. It simply feels safer to use.

Developers using the API get a massive benefit. They sleep better at night. They worry much less about their apps suddenly going rogue.

Choosing this tool is a statement. You aren’t just picking tech; you are aligning with a philosophy of responsible AI development. In the end, it matters who owns the code.

Ultimately, Claude AI belongs solely to Anthropic, not the tech giants writing the checks. By prioritizing safety through a unique corporate structure, the Amodei siblings ensure their mission survives the hype. Whether this ethical approach wins the race remains to be seen, but at least we know who is actually driving.