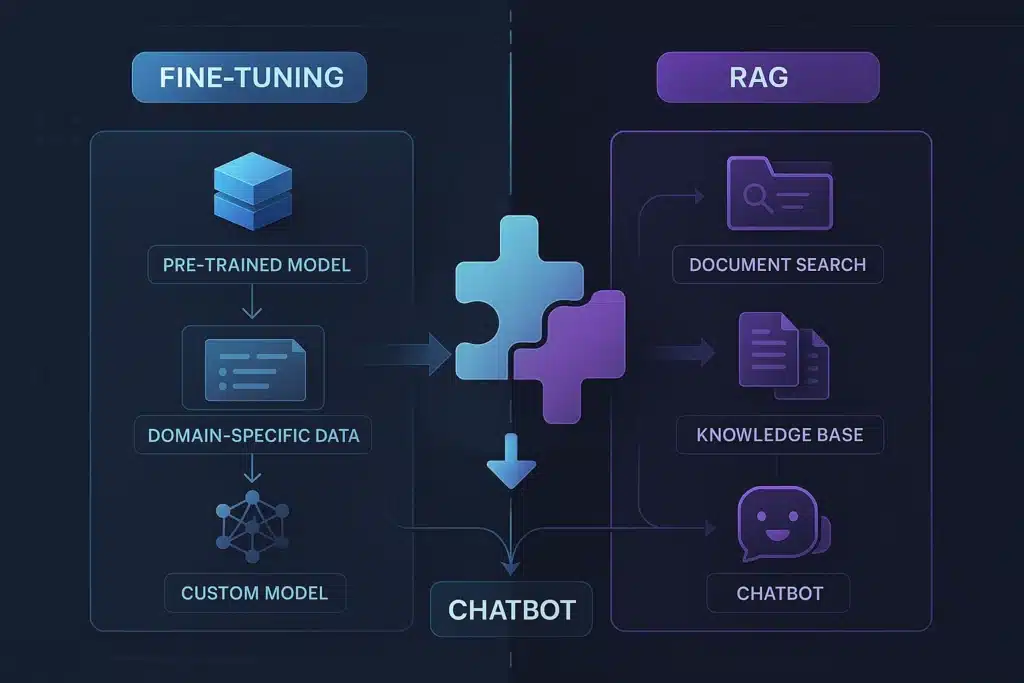

When building an intelligent chatbot, you need to choose the right technical approach: the underlying model has to be adapted to what you want the chatbot to do. Two methods dominate LLM customization today: fine-tuning and RAG (Retrieval-Augmented Generation). Both improve the relevance of the AI’s responses, but they work in very different ways.

So, should you specialize an AI chatbot with fine-tuning, enrich its responses with a document base via RAG, or combine the two in a hybrid approach, often the most effective?

This article explains the differences between fine-tuning and RAG, their respective advantages depending on your profile, and some concrete examples of how to apply each method.

Fine-tuning and RAG: definitions to better understand the differences

Fine-tuning and RAG are two advanced approaches to improving the performance of conversational AI such as ChatGPT. The two methods are based on very different logics, even if they share the same goal of increasing the relevance and personalization of AI responses.

What is Fine-tuning in AI?

Fine-tuning is the technique of specializing a pre-trained artificial intelligence model to adapt it to a specific task.

In concrete terms, this involves re-training all or part of the model on a restricted, targeted data set corresponding to the task or domain in question. This makes it possible to adjust the model’s parameters (often only the upper layers of the neural network) so that it performs better on specific use cases, while retaining the general knowledge acquired during pre-training.
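To make the idea of adjusting only the upper layers concrete, here is a toy sketch in plain Python. It is not how a real LLM is fine-tuned: the two-layer model, the weight values and the three-example dataset are all invented for illustration. It only shows the mechanism, where the lower layer stays frozen and gradient descent updates the head.

```python
import math

# "Pre-trained" lower layer: these weights stand in for general knowledge
# and stay frozen during fine-tuning (the values are made up).
FROZEN_WEIGHTS = [[0.5, -0.3], [0.8, 0.2]]


def lower_layer(x):
    """Frozen feature extractor: a fixed linear map followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def predict(head, x):
    """Upper layer (the head): the only part that fine-tuning updates."""
    return sum(h * f for h, f in zip(head, lower_layer(x)))


def mean_squared_error(head, data):
    return sum((predict(head, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(head, data, lr=0.1, epochs=200):
    """Gradient descent on the head only; FROZEN_WEIGHTS is never modified."""
    head = list(head)
    for _ in range(epochs):
        grads = [0.0] * len(head)
        for x, y in data:
            feats = lower_layer(x)
            err = predict(head, x) - y
            for i, f in enumerate(feats):
                grads[i] += 2 * err * f / len(data)
        head = [h - lr * g for h, g in zip(head, grads)]
    return head


# Small, targeted dataset for the new task (toy values).
task_data = [([1.0, 0.0], 1.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 0.0)]

initial_head = [0.0, 0.0]
tuned_head = fine_tune(initial_head, task_data)
print("error before fine-tuning:", mean_squared_error(initial_head, task_data))
print("error after fine-tuning: ", mean_squared_error(tuned_head, task_data))
```

The error on the targeted dataset drops while the frozen layer is untouched, which is the trade-off described above: better performance on the specific task without overwriting what the lower layers already encode.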

The aim of fine-tuning is to adapt the model to specific business needs, areas of expertise or customized tasks.

Fine-tuning lets you:

- Add domain-specific knowledge or specialized vocabulary (legal, medical, etc.);

- Adapt the tone or style of the responses, e.g. more formal, educational or engaging;

- Take into account special cases or new types of data, so that the model has precise context, by teaching it what it doesn’t yet know;

- Improve performance on specific tasks, such as classifying text, images or business documents.

Example of Fine-tuning in AI

Here is an example of fine-tuning applied in a business context.

Take a law firm that wants to use a generalist AI (like Mistral AI’s Le Chat) to assist its lawyers in drafting legal documents and analyzing contracts.

But the AI:

- Uses language that is sometimes too vague or unsuited to legal writing;

- Is not up to date on recent French legislation;

- Does not always interpret complex labor law cases correctly.

The aim of fine-tuning here is to adapt the LLM to:

- French labor law (including the latest updates);

- Precise legal vocabulary;

- The expected structure of legal documents (agreements, clauses, formal notices, etc.);

- Real-life cases handled by the firm.
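In practice, the first step for the firm would be to turn its cases into training examples. The sketch below assumes a chat-style JSONL layout (one JSON object per line with a `messages` list), which several hosted fine-tuning services accept in similar form; check your provider’s documentation for the exact schema. The system prompt, the helper names and the placeholder cases are hypothetical.

```python
import json

# Hypothetical system prompt describing the target behavior.
SYSTEM_PROMPT = (
    "You are a legal assistant specialized in French labor law. "
    "Use precise legal vocabulary and the firm's document structure."
)

# Placeholders only: real examples would come from anonymized case files
# reviewed by the firm's lawyers.
firm_cases = [
    ("<question drawn from an anonymized case file>",
     "<answer validated by a lawyer of the firm>"),
    ("<request to draft a clause>",
     "<clause written in the firm's expected structure>"),
]


def to_training_example(question, answer):
    """One training example: the conversation the model should learn to reproduce."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(cases):
    """Serialize all examples, one JSON object per line."""
    return "\n".join(
        json.dumps(to_training_example(q, a), ensure_ascii=False) for q, a in cases
    )


print(to_jsonl(firm_cases))
```

The quality of these examples matters more than their number: each assistant answer should show exactly the vocabulary and structure the firm expects.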

What is RAG (Retrieval-Augmented Generation) in AI?

Retrieval-Augmented Generation (RAG) is a hybrid method combining two complementary modules:

- An information retrieval module, which searches in real time for relevant external documents or data (knowledge base, internal documents, web, etc.) according to the user’s request;

- A generative module, which uses both the user’s question and the information retrieved to generate a contextualized answer, accurate and aligned with the sources the AI has consulted.

One of the fundamental principles of RAG is the separation between knowledge (retrieval) and reasoning (generation). The model itself remains general, but before responding it searches a database or a set of up-to-date documents. It then uses this information to formulate a richer, more current answer to the question.
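The two modules can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a simple bag-of-words similarity for retrieval (production systems typically use embeddings and a vector store) and stopping at the prompt that would be sent to the generative model. The function names and the HR snippets in `knowledge_base` are invented.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Retrieval module: return the ids of the k most similar documents."""
    query = Counter(tokenize(question))
    scored = [(cosine(query, Counter(tokenize(text))), doc_id)
              for doc_id, text in documents.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(question, documents, k=2):
    """Generation input: the question plus the retrieved passages."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}"
                        for doc_id in retrieve(question, documents, k))
    return ("Answer using only the context below and cite the source ids.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")


# Invented example content, not real policies.
knowledge_base = {
    "leave-policy": "Employees request paid leave through the HR portal at least two weeks in advance.",
    "remote-work": "Remote work is allowed up to two days per week with manager approval.",
    "training": "Each employee has an annual training budget managed by the HR department.",
}

question = "How many days of remote work are allowed per week?"
print(build_prompt(question, knowledge_base))
```

The model never needs to have seen these documents during training: everything it needs arrives in the prompt at question time.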

Unlike fine-tuning, RAG does not modify the internal parameters of the AI model to adapt it to a particular context. Instead, it relies on an external knowledge base, which makes it possible to:

- Integrate updated or specific information without re-training the model;

- Reduce maintenance and adaptation costs;

- Add transparency by citing the sources used to generate the answer.
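The first and last points can be illustrated with a small sketch: updating a document changes what the assistant retrieves immediately, with no training step, and each answer carries the ids of the sources it used. The `KnowledgeBase` class, its method names and the expense-policy texts are hypothetical, and the word-overlap search is a stand-in for a real search engine.

```python
import re


def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Tiny in-memory document store keyed by source id."""

    def __init__(self):
        self.documents = {}

    def upsert(self, source_id, text):
        """Add a document, or replace it if the id already exists."""
        self.documents[source_id] = text

    def search(self, question, k=1):
        """Return the ids of the k documents sharing the most words with the question."""
        query = words(question)
        scored = [(len(query & words(text)), source_id)
                  for source_id, text in self.documents.items()]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(reverse=True)
        return [source_id for _, source_id in scored[:k]]


def context_with_sources(question, kb):
    """What the generative model would receive: passages plus their source ids."""
    sources = kb.search(question)
    return {"context": [kb.documents[s] for s in sources], "sources": sources}


kb = KnowledgeBase()
kb.upsert("expense-policy", "Travel expenses are reimbursed within 30 days.")
before = context_with_sources("When are travel expenses reimbursed?", kb)

# The policy changes: only the document is updated, the model is untouched.
kb.upsert("expense-policy", "Travel expenses are reimbursed within 15 days.")
after = context_with_sources("When are travel expenses reimbursed?", kb)

print(before)
print(after)
```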

The RAG method is particularly advantageous for:

- Accessing internal company data not present in the training data;

- Answering questions requiring recent or evolving information;

- Limiting the hallucinations of generative AI models by grounding answers in verifiable facts.

Example of RAG application in AI

Here is an example of RAG applied in a business context.

Take the HR department of a large company that wants to use a generalist AI (such as ChatGPT) to help employees with internal HR issues (leave, teleworking, training, benefits, internal procedures).

But the AI:

- Sometimes gives vague or generic answers, not aligned with internal rules;

- Is unaware of the company’s HR policies (e.g. RTT working-time reduction days, internal mobility, collective agreements);

- Does not adapt to the specificities of different sites or employee profiles.

The aim of RAG here is to connect the AI to:

- Up-to-date internal HR documentation (policies, manuals, procedures);

- Company agreements and guides specific to each entity or country;

- The history of questions already asked (internal FAQ, HR tickets);

- The schedules and rules in force in each department or region.
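Because rules differ by entity or country, retrieval usually needs to filter on metadata before ranking, so that an employee only gets passages that apply to them. The sketch below shows one way to do this; the `HRDocument` fields, the `"ALL"` convention for group-wide documents and the policy texts are assumptions made for the example.

```python
import re
from dataclasses import dataclass


@dataclass
class HRDocument:
    doc_id: str
    country: str  # country code, or "ALL" for group-wide documents (assumed convention)
    text: str


def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_for_employee(question, documents, employee_country, k=2):
    """Keep only documents that apply to the employee, then rank by word overlap."""
    eligible = [d for d in documents if d.country in (employee_country, "ALL")]
    query = words(question)
    ranked = sorted(eligible, key=lambda d: len(query & words(d.text)), reverse=True)
    return [d.doc_id for d in ranked[:k] if query & words(d.text)]


# Invented documents, not real policies.
hr_documents = [
    HRDocument("telework-fr", "FR", "Teleworking in France: up to three days per week."),
    HRDocument("telework-de", "DE", "Teleworking in Germany: up to two days per week."),
    HRDocument("training-group", "ALL", "Training requests are submitted through the HR portal."),
]

question = "How many teleworking days per week?"
print(retrieve_for_employee(question, hr_documents, "FR"))
print(retrieve_for_employee(question, hr_documents, "DE"))
```

The same question returns a different source depending on the employee’s country, which addresses the lack of adaptation to sites and profiles noted above.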

Comparison between Fine-Tuning and RAG

To summarize, here is the comparison between Fine-tuning and RAG:

- Fine-tuning: adapt a model to a specific task by re-training it on new data. Ideal for highly specialized needs, or tasks requiring modification of the model’s underlying behavior.

- RAG: dynamically enrich the model’s responses by giving it access to external information, without affecting its internal parameters. Ideal for integrating evolving knowledge, guaranteeing the freshness and verifiability of responses, and limiting maintenance costs.

| | Fine-tuning | RAG (Retrieval-Augmented Generation) |
| --- | --- | --- |
| Principle | Specialize the model on a specific subject | The AI fetches information from a document base |
| Model modification | Yes (adjustment of internal parameters) | No (model remains unchanged) |
| Data used | Task-specific data | Knowledge base and external documentation (internal or web) |
| Adapting to new subjects | Re-training required | Update the document base |
| Maintenance costs | Higher (retraining, version management) | Lower (document updates) |
| Transparency | Low (origin of a response is difficult to trace) | High (the AI can cite its sources) |
| Examples of use | Specialized translation, object detection, etc. | Corporate chatbot, dynamic FAQ, document search |

How about a hybrid approach combining both methods?

Far from being mutually exclusive, fine-tuning and RAG can be combined to get the best out of both approaches. This hybrid approach produces an AI that is both expert in a specific field and capable of accessing up-to-date data.

In this hybrid configuration:

- Light fine-tuning specializes the AI on a recurring task or dialogue type, teaching it the tone, structure and business expectations;

- RAG supplements this expertise by enabling it to retrieve precise, up-to-date information from an external knowledge base (internal to the company, or connected to the web).

This approach avoids two classic pitfalls:

- A fine-tuned but fixed model, which is unaware of new developments;

- A generalist model that consults documents, but doesn’t have a detailed understanding of the context or the business.

Example of a hybrid approach: light fine-tuning + RAG on a specialized knowledge base

Imagine a conversational AI assistant in the medical field, designed to guide patients or support healthcare professionals:

- We start with light fine-tuning.

The AI is trained on:

- Real patient-doctor dialogues;

- Anonymized teleconsultation exchanges;

- Triage or medical response instructions.

It learns to adopt a reassuring tone, ask the right questions, and structure its answers according to levels of urgency or specialties.

- Then we add RAG on an up-to-date medical database.

In parallel, the AI is connected to:

- A regularly updated database of symptoms and pathologies (e.g. WHO, internal hospital databases);

- Clinical recommendations, protocols and scientific updates.

When interacting with a patient, the AI can ground its responses in this verified information base while applying the conversational logic acquired through fine-tuning.
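Structurally, the hybrid setup is the RAG pipeline with the generalist model swapped for the fine-tuned one. The sketch below shows only that wiring: `hybrid_answer` is a hypothetical helper, and both the retriever and the fine-tuned model are replaced by stubs so the example runs on its own. In a real system they would call the medical database and the model endpoint.

```python
from typing import Callable, List


def hybrid_answer(question: str,
                  retrieve: Callable[[str], List[str]],
                  generate: Callable[[str], str]) -> str:
    """RAG supplies verified passages; the fine-tuned model supplies tone and structure."""
    passages = retrieve(question)
    context = "\n".join(f"- {p}" for p in passages)
    prompt = f"Verified context:\n{context}\n\nPatient message: {question}"
    return generate(prompt)


def stub_retrieve(question: str) -> List[str]:
    """Stand-in for a search over the regularly updated medical database."""
    return ["<passage from the updated medical database>"]


def stub_fine_tuned_model(prompt: str) -> str:
    """Stand-in for a call to the fine-tuned model."""
    return "<reassuring, structured reply grounded in>\n" + prompt


reply = hybrid_answer("<patient question>", stub_retrieve, stub_fine_tuned_model)
print(reply)
```

Passing the retriever and the model as arguments keeps the two concerns independent: the document base can be refreshed daily while the fine-tuned model is only retrained when the expected tone or structure changes.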

Use cases: choosing between RAG and fine-tuning

When to use RAG

- Dynamic or regulatory FAQs (banking, insurance);

- Extensive or frequently updated documentary database;

- Traceability requirements (compliance, legal);

- Multilingual chatbots: translatable document base.

When should Fine-tuning be used?

- HR assistant chatbot or internal technical support with few documents but a need for fluid dialogue;

- Cases where response speed is crucial (no retrieval step at query time);

- Offline or embedded applications.
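The criteria in the two lists above can be condensed into a rule-of-thumb helper. This is only a sketch of the reasoning, not a formal decision procedure: the function name, the four boolean parameters and the returned labels are invented for the example, and real projects will weigh cost, data volume and team skills as well.

```python
def recommend_approach(*,
                       knowledge_changes_often: bool,
                       needs_source_traceability: bool,
                       needs_custom_tone_or_format: bool,
                       needs_low_latency_or_offline: bool) -> str:
    """Map the article's criteria to a suggested starting point."""
    wants_rag = knowledge_changes_often or needs_source_traceability
    wants_fine_tuning = needs_custom_tone_or_format or needs_low_latency_or_offline
    if wants_rag and wants_fine_tuning:
        return "hybrid"
    if wants_rag:
        return "rag"
    if wants_fine_tuning:
        return "fine-tuning"
    return "no strong signal"


# Regulatory FAQ for an insurer: evolving rules, answers must be traceable.
print(recommend_approach(knowledge_changes_often=True,
                         needs_source_traceability=True,
                         needs_custom_tone_or_format=False,
                         needs_low_latency_or_offline=False))

# Embedded assistant with a fixed scope and a specific dialogue style.
print(recommend_approach(knowledge_changes_often=False,
                         needs_source_traceability=False,
                         needs_custom_tone_or_format=True,
                         needs_low_latency_or_offline=True))
```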

What strategy should you adopt to design a high-performance chatbot?

Here are some indicative recommendations for designing an intelligent chatbot, by type of organization:

- Startup/SME: start with RAG to iterate quickly;

- Companies with highly sensitive data: in-house fine-tuning + auditability;

- Group with a rich document base: RAG + intelligent ranking layer;

- Need for a highly conversational chatbot with a strong UX focus: fine-tuning + RLHF (reinforcement learning from human feedback) + persona.