The bottom line: Artificial General Intelligence (AGI) remains a theoretical goal distinct from today’s “narrow” AI tools. While current models excel at specific patterns, true AGI would possess the human-like ability to generalize knowledge to new domains without task-specific training. Understanding this gap clarifies why, despite impressive chatbots, a machine with genuine common sense is still the industry’s holy grail.

Do you feel overwhelmed by the constant headlines predicting a robot apocalypse, especially when your own smart devices still struggle to understand the simplest voice commands? You are likely wondering what AGI is because the sudden rise of generative tools has blurred the line between a clever calculator and a machine that truly thinks like a human. We will strip away the confusing marketing hype to reveal exactly why Artificial General Intelligence remains the theoretical holy grail that tech giants are chasing, and why it is completely different from the software we use today.

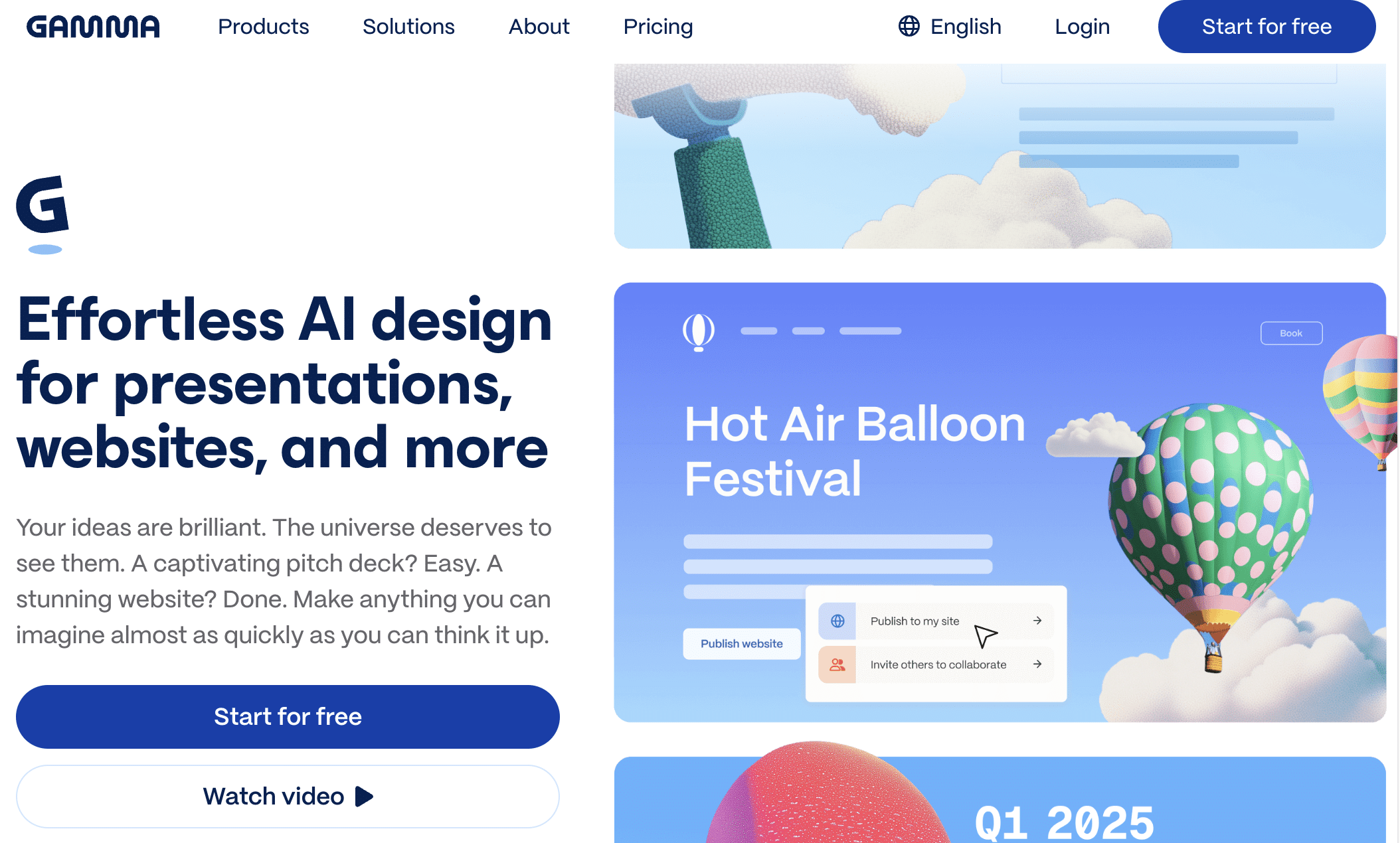

A Tale of Two AGIs: Clearing Up the Confusion First

The Acronym Mix-up: AI vs. Taxes

You might be scratching your head right now. It is messy because “AGI” stands for two wildly different concepts. One determines your yearly tax bill, while the other aims to replicate the human mind.

In the financial world, AGI stands for Adjusted Gross Income. It is that crucial number on your IRS Form 1040 (line 11 on recent versions of the form), calculated by taking your total income and subtracting certain adjustments, like student loan interest. It is purely a financial metric.

But listen, this article isn’t about the IRS. We are strictly focusing on the other definition: the technological holy grail.

Setting the Stage for Artificial General Intelligence

So, what is AGI in the tech sphere? It stands for Artificial General Intelligence. Think of it as the theoretical “Holy Grail” of computer science: a system capable of learning any intellectual task that a human being can do.

Experts often refer to this as “strong AI.” We aren’t talking about a calculator here; we mean a machine that doesn’t just execute code but actually “thinks” and generalizes knowledge across different domains.

Stick around, because we need to unpack exactly what that capability implies for us.

Why This Distinction Matters More Than Ever

The explosion of Generative AI tools like ChatGPT has shoved this term into the spotlight. Suddenly, everyone assumes we have arrived at the finish line, creating a dangerous fog of confusion between advanced tools and actual thinking machines.

Understanding this difference is the only way to have an intelligent conversation about our future. Mistaking current chatbots for genuine autonomous intelligence is a trap you want to avoid if you want to stay credible.

Let’s be clear: what we have today is impressive, but it’s not AGI. Not even close. And understanding why is the whole point.

The Promise and the Hype

Achieving this level of cognition is the stated long-term mission for giants like OpenAI and Google DeepMind. It is the driving force behind billions of dollars in current R&D spending.

While it sounds like pure sci-fi, this is an active field of theoretical research. I emphasize “theoretical” because, despite the hype and the funding, we haven’t cracked the code on flexible, human-level reasoning just yet. It remains an open research problem, not a finished product.

To truly grasp the stakes of this race, we must first pin down exactly what we are trying to build.

So, What Is Artificial General Intelligence, Really?

The Core Idea: An AI That Can Do Anything You Can

Let’s cut through the noise. What is AGI exactly? It represents a theoretical machine possessing genuine human-level cognitive abilities. Basically, it could learn and execute any intellectual task you or I can handle without breaking a sweat.

Here is the real kicker. It does this without specific prior training for every single new task. That “General” part is the only thing that actually matters here.

Think of it this way. Current tech is just a specialized screwdriver, while AGI is the ultimate Swiss Army Knife.

The Stark Contrast With Narrow AI

Now, look at Narrow AI, often called weak AI. This covers virtually everything we use today. Each of these systems is built for one task or one bounded family of tasks, such as image recognition or translation.

You see the limitation? A dedicated image generator cannot write a single line of code. It certainly cannot analyze complex financial data. It remains stuck inside its original training domain.

Even advanced generative models are just Narrow AI. They are versatile, sure, but they remain limited.

Beyond Pattern Matching: True Understanding

AGI goes way beyond simple pattern matching. It implies a deep form of contextual understanding and logic. It actually reasons through problems instead of just guessing the next word.

The ultimate goal is not just to perform tasks, but to understand and learn any intellectual task a human can, without being explicitly programmed for each one.

It is the difference between reciting a book and truly understanding it. That is the gap.

The Ability to Generalize: The Holy Grail

Generalization is the absolute main characteristic of AGI. This is the capacity to transfer knowledge from one domain to a completely different one. It takes skills from A and applies them to B.

Humans do this constantly. Learning to ride a bike helps you learn a motorcycle later. An AGI should make similar cognitive links.

This is precisely what current AIs cannot do. They fail here.

The Building Blocks of a Thinking Machine

More Than Just a Powerful Calculator

It is easy to fall into the trap of thinking AGI is just about raw processing speed, but that misses the point entirely. Real intelligence isn’t about crunching numbers faster; it is about qualitative cognitive capabilities that adapt to chaos. We aren’t looking for a better calculator here, but a mind that actually understands context.

To truly answer what AGI is, we have to look at the specific human traits we are trying to mirror. We are talking about reasoning, planning, complex problem-solving, and abstract thought. It is the ability to learn rapidly from almost nothing, not just to regurgitate data fed in during training.

The Core Cognitive Toolkit of an AGI

So, what exactly is in the box? Researchers argue about the specific specs, but there is a general consensus on the “starter kit” required for a machine to think. It is not an exhaustive list, but without these tools, you are just building a fancy chatbot.

Here is what the experts say is non-negotiable for a system to claim general intelligence:

- Reasoning and problem-solving: The ability to strategize, plan, and think abstractly to solve unfamiliar problems.

- Knowledge representation: Building a rich, internal model of the world, including common sense and social norms.

- Learning from experience: Rapidly acquiring new skills and knowledge from small amounts of data, just like humans do.

- Natural language understanding: Not just processing text, but truly grasping nuance, context, and subtext in communication.

- Creativity: The capacity to generate ideas or artifacts that are novel and valuable.

Common Sense: The Missing Ingredient

Here is the kicker: common sense is incredibly hard to code, yet it is the glue of intelligence. It is that implicit knowledge we all have—like knowing water makes things wet or that you can’t be in two places at once. Machines don’t just “get” this stuff naturally.

Current AI models still stumble here. Ask a system about physical objects, and it may confidently tell you something that sounds plausible but is totally absurd in reality. Its knowledge comes from text, not from living under the physical rules we do.

Simply put, without this grounding in reality, there is no “general” intelligence. We just have a very expensive parrot.

Self-Awareness and Consciousness: The Final Frontier?

Now we hit the philosophical wall. Does a machine need to be awake to be smart? It is a massive debate. Some argue an AGI needs to “feel” to be general, while others say performance is all that matters.

We have to separate intelligence—solving problems—from consciousness, which is the subjective experience. Most researchers are chasing the first one because, frankly, the second is a mystery that even neuroscientists haven’t cracked yet.

How Would We Even Know? The AGI Litmus Tests

Defining AGI is great for textbooks, but how do we actually prove a machine has reached that level without just taking its word for it?

The Classic: The Turing Test

You have likely heard of the Turing Test, probably the most famous benchmark in AI. The premise is deceptively simple: if a human judge holds a conversation with both a machine and a human, can the judge tell which is which?

But here is the problem with that standard today. Modern LLMs can arguably “pass” conversational versions of this test simply by being world-class mimics: they predict the next word well enough to sound human, which says little about whether they understand what they say.

The Turing test measures the ability to deceive, not true intelligence.

More Modern (and Practical) Benchmarks

Since fooling a human judge is getting too easy, people such as Apple co-founder Steve Wozniak and AI researcher Nils Nilsson have proposed concrete tests that require actual, messy problem-solving.

- The Coffee Test: Can the machine walk into an average American home and figure out how to make coffee, including finding the coffee machine, the mug, and the water?

- The IKEA Test: Can the AI assemble a piece of IKEA furniture correctly from the parts and the instructions?

- The Robot College Student Test: Can the AI enroll in a university, take the same classes as human students, and successfully obtain a degree?

- The Employment Test: Can an AI perform a human job so well that it’s economically viable and indistinguishable from a human employee?

What These Tests Really Measure

These tests share a common thread: they care less about chat logs than about the capacity to act in the real world. It is about taking vague instructions and, in several cases, manipulating physical objects to get a specific result.

They test generalization, planning, and often physical interaction, skills that are far harder to fake than writing a poem. The physical ones force the AI to use sensory perception and motor skills, bridging the gap between code and reality.

The Problem With a Single Finish Line

We tend to treat intelligence like a light switch—it is either on or off. But it is really a spectrum, and there probably won’t be a single “Aha!” moment where we officially declare AGI has arrived.

The emergence of AGI will likely be a gradual process, with systems getting increasingly capable at wider ranges of tasks. That is why relying on a single finish line is a mistake.

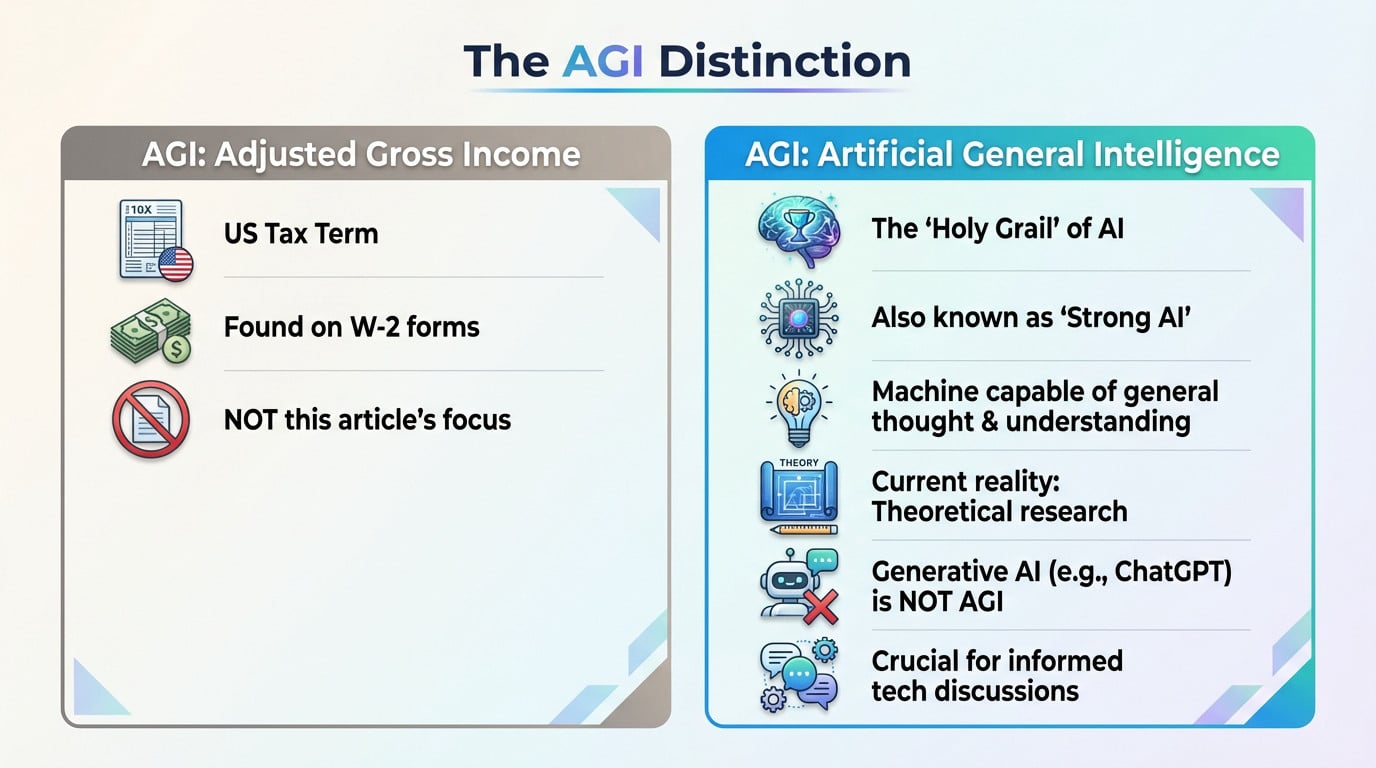

The Spectrum of Intelligence: Where Today’s AI Fits In

Since there isn’t a magical “on/off” switch for AGI, how do we actually rank progress? Researchers have proposed a framework to clear up the mess.

From Narrow to General: A Gradual Path

Researchers at Google DeepMind proposed a taxonomy that ditches the simple “smart or dumb” label. Instead, they rate AI systems on two axes, how well they perform and how general they are, helping us pinpoint where a machine sits on the intelligence ladder.

This model is a breath of fresh air because it moves us past the binary ANI vs AGI argument. It offers a nuanced snapshot of current research, acknowledging that intelligence isn’t black and white—it’s a gradient.

Levels of AGI Performance and Autonomy

Think of the following comparison as a reality check. It visualizes the massive gap between the AI tools we use daily and the theoretical powerhouse that is true AGI.

| Category | Narrow AI (ANI) | Emerging AGI (e.g., Advanced LLMs) | True AGI (Theoretical) |
|---|---|---|---|
| Scope | Single, specific task (e.g., playing chess, identifying cats). | Multiple tasks within a domain (e.g., writing, coding, summarizing) but requires specific prompting. | Any intellectual task a human can perform, across any domain. |
| Learning | Trained on a specific dataset. Cannot learn outside this scope without retraining. | Pre-trained on vast data. Can perform zero-shot/few-shot tasks, but doesn’t truly learn new skills autonomously. | Learns continuously and autonomously from experience, transferring knowledge between domains. |
| Generalization | None. Knowledge is not transferable. | Limited. Can apply patterns to similar problems but fails on fundamentally new concepts. | Deep generalization. Can apply abstract principles learned in one area to a completely novel one. |
| Common Sense | Zero. No understanding of the physical or social world. | Simulated. Can recite common sense facts from data but lacks genuine understanding. | Innate. Possesses a rich, implicit model of how the world works. |
| Autonomy | Tool. Requires direct human command to operate. | Consultant/Tool. Can execute complex instructions but lacks independent goals or initiative. | Agent. Can set its own goals and act autonomously to achieve them. |

So, Are LLMs a Form of AGI?

Let’s be blunt: no, LLMs are not AGI in the full sense. Based on the framework, they sit in the “emerging” AGI bucket at best. They display competence in specific areas but remain incomplete, lacking the autonomy to function without us holding their hand.

Here is my take: they are incredibly sophisticated stochastic parrots. They are masters of statistical imitation, predicting the next word with eerie accuracy, yet they don’t understand a single thing they say. It’s mimicry, not mind.
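To make “predicting the next word” concrete, here is a deliberately crude sketch. It is not how real LLMs work internally (they use large neural networks, not lookup tables), and the tiny corpus is made up for illustration. It only shows the underlying idea of picking the statistically most likely follower without any notion of meaning.

```python
from collections import Counter, defaultdict

# Tiny hypothetical corpus; real models train on vastly more text.
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def build_bigram_counts(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


counts = build_bigram_counts(CORPUS)
print(predict_next(counts, "the"))   # cat
print(predict_next(counts, "sat"))   # on
print(predict_next(counts, "bird"))  # None
```

The model “knows” that “cat” tends to follow “the” only because it counted it. Ask about a word it never saw, and it has nothing to say.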

The Theoretical Paths to Building AGI (Without the Blueprints)

We know exactly what the destination looks like—a machine with human-level cognition. But the map to get there? That is where the experts start arguing. Here is how researchers are actually trying to build it.

The Symbolic Approach: Top-Down Logic

Think of this as the “Good Old-Fashioned AI” method. It treats intelligence like a giant flowchart. Programmers hard-code explicit rules into the system, essentially teaching it to think using strict “if… then…” logic statements to represent the world. It relies on symbols humans can actually read.

It works beautifully for chess or algebra—places where the rules never change. But throw it into the messy real world? It crumbles. You can’t write a rule for every nuance of a conversation or a chaotic street scene. It’s just too rigid to handle ambiguity.
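A minimal sketch of the symbolic idea, assuming a made-up traffic scenario: the rules and fact names below are hypothetical and exist only to show the “if… then…” style and where it breaks down.

```python
# A toy rule-based system: every behaviour is an explicit, human-readable rule.
# The facts ("light", "pedestrian") and conclusions are invented for illustration.

RULES = [
    # (condition over the facts, conclusion)
    (lambda f: f.get("light") == "red", "stop"),
    (lambda f: f.get("light") == "green" and not f.get("pedestrian"), "go"),
    (lambda f: f.get("pedestrian"), "stop"),
]


def decide(facts):
    """Return the conclusion of the first matching rule, or None if no rule applies."""
    for condition, conclusion in RULES:
        if condition(facts):
            return conclusion
    return None  # nobody wrote a rule for this situation


known = decide({"light": "green", "pedestrian": False})
unknown = decide({"light": "flashing yellow", "fog": True})
print(known)    # go
print(unknown)  # None
```

The second call is the rigidity problem in miniature: the moment reality hands the system a case nobody anticipated, it has no answer at all.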

The Connectionist Approach: Bottom-Up Emergence

This is the heavyweight champion right now. Instead of programming rules, the connectionist approach loosely imitates the structure of the human brain using artificial neural networks. It learns from data by adjusting the strength of the connections (the weights) between artificial neurons.

In this view, intelligence “emerges” from the interaction of millions of simple neurons processing information together, rather than from following a script. This concept is the engine behind modern Deep Learning, allowing systems to spot patterns we miss.

It is incredibly powerful, sure. But it creates a “black box”—we often have no clue how the AI actually reached its conclusion.
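For a feel of what “learning by adjusting connections” means, here is a minimal sketch of a single artificial neuron (a classic perceptron) learning logical AND from four examples. It is a toy under simplifying assumptions: one neuron, a fixed learning rate, and no libraries. Modern deep learning stacks millions of such units and trains them differently.

```python
# A single artificial neuron learning logical AND from examples.
# No rule for AND is ever written down; only the connection weights change.

def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(samples, epochs=20, learning_rate=1.0):
    """Perceptron learning rule: nudge weights whenever a prediction is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND_SAMPLES)
outputs = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(outputs)  # [0, 0, 0, 1]
```

Print `weights` and `bias` afterwards and you get three plain numbers. Nothing in them reads as “AND”, which is the black-box problem in miniature.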

Other Schools of Thought

If you think it’s a simple binary choice between symbols and neurons, think again. Researchers are exploring other wild avenues to crack the code of AGI.

- Hybrid approach: Why choose sides? This method attempts to combine the structured reasoning strengths of symbolic logic with the raw data learning power of connectionist methods.

- Whole-organism architecture: Some argue intelligence isn’t just code in a box. This theory suggests AGI needs a physical body to interact with the world, an idea known as embodied cognition.

- Universalist approach: This is the hunt for a single, theoretically perfect computational algorithm that solves intelligence once and for all, regardless of the specific task.

The Consensus? There Is No Consensus

Here is the cold hard truth: none of these have delivered AGI yet. It remains a massive interdisciplinary puzzle, pulling in computer science, neuroscience, and cognitive psychology. We are still guessing at the solution.

The truth is, nobody knows which path leads to the holy grail. It’s likely going to be a messy mix of all these, with a fundamental discovery or two we haven’t even dreamt up yet.

The Biggest Hurdles on the Road to AGI

The path is far from clear. To truly understand what AGI is, we must look at the colossal obstacles that remain.

The Transfer Learning Problem

We hit a hard wall with the transfer learning problem. This is arguably the toughest technical beast to tame. Current models struggle to apply knowledge flexibly, and their performance can drop sharply when the context shifts away from what they saw in training.

Here is the catch: they mostly learn statistical correlations rather than cause-and-effect relationships. They guess the next word based on math, not actual understanding. It is mimicking, not reasoning.

Without fixing this, an AI remains a hyper-specialized tool, no matter its speed. It is just a parrot with a better memory.

The Ghost in the Machine: Emotion and Creativity

Then there is the void of emotional intelligence and authentic creativity. Algorithms can simulate art or mimic sadness, sure. But it is a hollow imitation scraped from existing data. They do not feel; they just process patterns.

This brings us to a humbling realization about our silicon creations.

True creativity and emotional intelligence remain the ghost in the machine: a puzzle that current systems, for all their power, have yet to solve.

So, ask yourself this: can intelligence without emotions truly be “general”? I seriously doubt it.

Sensory Perception and Physical Interaction

We often underestimate the weight of sensory perception. Real human intelligence is shaped by our physical collisions with the world: the touch, the taste, the smell. We learn by doing, not just by reading static text.

Current AIs are essentially “brains in a jar,” totally cut off from physical reality. While robotics and computer vision are improving, they remain far from human finesse. They see pixels, not the world.

The Data and Energy Problem

Finally, consider the practical nightmare: the astronomical data and energy required to train these models. It is shockingly inefficient compared to a human child. We are essentially burning down forests just to light a single candle.

The reality is, AGI demands a massive breakthrough towards data-efficient learning. We need systems that learn rapidly from a few examples. Brute force computation simply will not get us there.

Ultimately, AGI represents the final frontier of computer science—a machine that truly understands. While we’re still far from that reality (and thankfully, safe from robot overlords for now), the journey is fascinating. Just remember: if it asks for your W-2 form, it’s probably the wrong AGI.