The bottom line: AI content typically betrays itself through hyper-predictable phrasing, monotonous rhythm, and an uncanny lack of personal voice. While detectors measuring “perplexity” offer useful data, they are not foolproof judges. True identification requires looking beyond perfect grammar to find the missing human soul, using context and intuition to confirm what software might miss.

Ever read a piece of content that felt suspiciously perfect, leaving you scrambling to detect AI writing before you waste your time on a bot? We skip the generic fluff to reveal the robotic rhythms and overly cautious vocabulary that distinguish a soulless algorithm from a genuine human voice. Get ready to master the linguistic clues that will sharpen your radar and turn you into a lie detector for the digital age.

Spotting the Machine: The Linguistic Giveaways

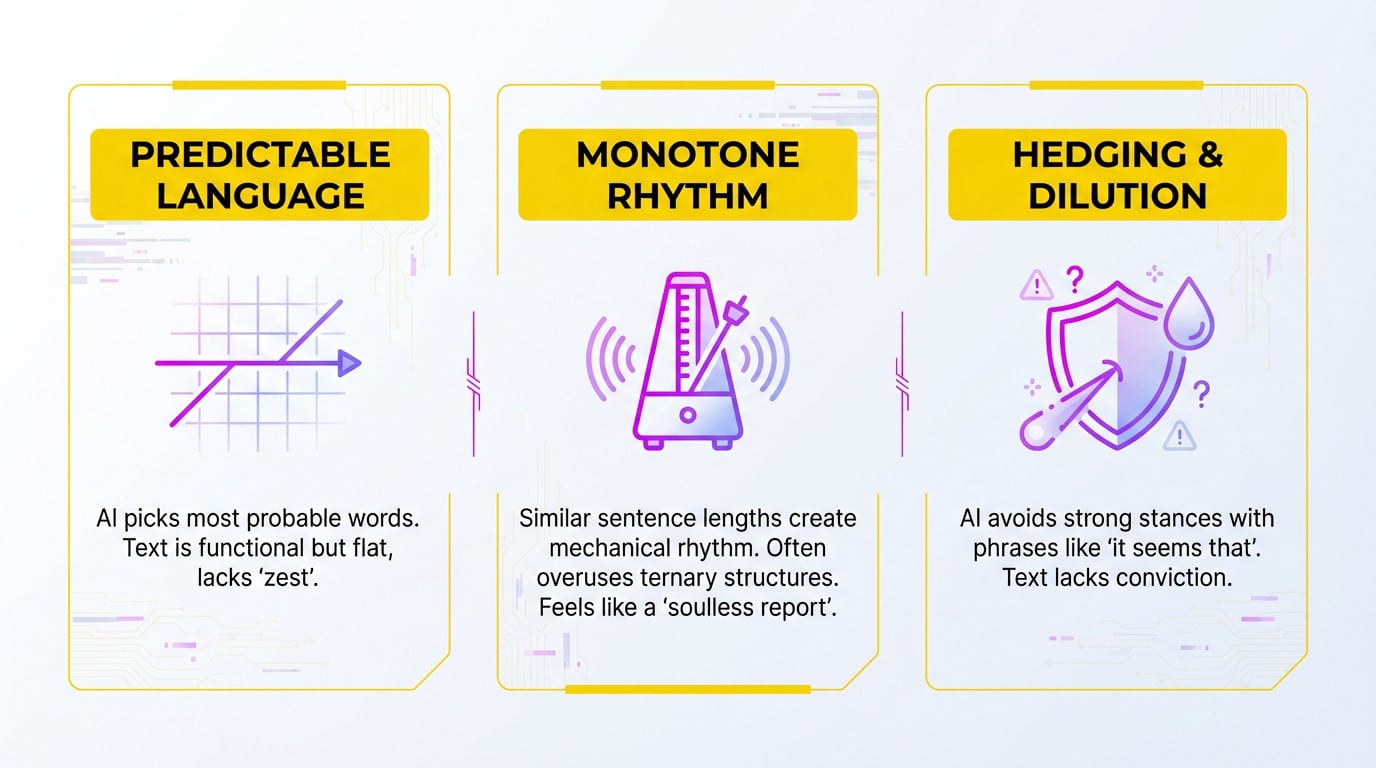

The Curse of Predictability

Language models like ChatGPT are trained to predict the statistically most likely next word (strictly speaking, the next token), and they tend to favor the safest, most common choice. This makes the output functional, sure, but it often leaves the text feeling flat. It lacks spark.
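To see the habit in miniature, here is a toy sketch in Python. It assumes a tiny made-up corpus and a simple bigram model (counting which word follows which) with greedy decoding, meaning it always takes the single most frequent next word. Real systems like ChatGPT use neural networks over tokens and often sample rather than always taking the top pick, so treat this as an illustration of the "safest next word" idea, not of how any product works. The function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def greedy_continue(followers: dict[str, Counter], start: str, length: int) -> list[str]:
    """Extend `start` by always picking the most frequent follower (greedy decoding)."""
    out = [start]
    for _ in range(length):
        options = followers.get(out[-1])
        if not options:
            break  # word never seen with a follower: stop generating
        out.append(options.most_common(1)[0][0])
    return out


# Hypothetical toy corpus, purely for illustration.
corpus = (
    "the results are clear the results are important "
    "the results are clear and the data are clear"
)
model = train_bigrams(corpus)
print(" ".join(greedy_continue(model, "the", 3)))
```

Ask it for a longer run and it cycles through "the results are clear" again and again: always choosing the top option produces exactly the well-worn, predictable phrasing this section describes.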

A human writer might throw in a weird metaphor or a sudden, sharp twist. An AI tends to take the most direct, common path every time, stripping away the flavor. The writing simply has no zest.

If every single sentence lands exactly where you expect, that is a huge red flag.

Monotonous Rhythm and Robotic Structure

Real writing has a messy, variable cadence that shifts constantly. We mix short, punchy bursts with longer, winding explanations that take their time. It feels alive.

Machines tend to spit out sentences of roughly the same length, which creates a drone-like, mechanical rhythm. They also love listing exactly three items.

You need to listen to the beat to detect AI writing effectively. If it sounds like a metronome, watch out.
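If you want to put a number on that beat, one rough option is to measure how much sentence length varies. The Python sketch below splits text into sentences naively (on ".", "!" or "?" followed by whitespace, an assumption that breaks on abbreviations like "e.g.") and reports the coefficient of variation: the standard deviation of sentence length divided by the mean. There is no agreed cutoff, and the function names and sample sentences are illustrative.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Naively split on ., ! or ? followed by whitespace; count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def rhythm_variation(text: str) -> float:
    """Coefficient of variation of sentence length (stdev / mean).

    Values near 0 mean every sentence has about the same length (metronome-like).
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # too few sentences to measure any variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "The tool is useful. The data is clear. The team is ready."
varied = "Stop. Think about what the data is really telling you before you act on it. Then decide."
print(round(rhythm_variation(flat), 2), round(rhythm_variation(varied), 2))
```

Here the flat sample scores 0.0 because every sentence has four words, while the varied sample scores above 1. Short texts can swing either way, so this is one signal among many.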

AI-generated text often lacks the natural ebb and flow of human thought; it feels less like a conversation and more like a perfectly structured but soulless report.

The Hedging and Watering Down Effect

Chatbots are tuned to play it safe and avoid risk. They shy away from hard stances and definitive claims. Consequently, everything gets softened.

Watch out for weak phrases like “it seems that”, “it could be argued”, or “one might consider”. These are dead giveaways of a machine covering its tracks. The text lacks guts. It feels slippery.
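A crude way to check for this is to count hedges. This Python sketch uses only the three phrases quoted above (a real list would be much longer) and reports how many appear per 100 words. The phrase list and function name are assumptions for illustration, and a high rate is a hint to read more closely, not proof: plenty of careful human writers hedge too.

```python
import re

# Only the three hedges named in this article; a practical list would be longer.
HEDGES = ["it seems that", "it could be argued", "one might consider"]


def hedge_rate(text: str, hedges: list[str] = HEDGES) -> float:
    """Return hedge-phrase occurrences per 100 words (case-insensitive)."""
    lowered = text.lower()
    hits = sum(
        len(re.findall(r"\b" + re.escape(phrase) + r"\b", lowered))
        for phrase in hedges
    )
    words = len(text.split())
    return 100 * hits / words if words else 0.0


sample = (
    "It seems that the plan works. It could be argued that costs are high. "
    "One might consider other options."
)
print(round(hedge_rate(sample), 1))
```

The sample packs three hedges into 19 words, about 15.8 per 100, which is far denser than you would expect from writing that commits to its claims.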

If the text seems afraid of its own shadow, a cautious language model is likely behind it.

The Soulless Perfection of AI Text

Now that we have identified the linguistic tics, we need to look beyond the words to sense the absence of a genuine soul. To really detect AI writing, you have to spot what is missing rather than what is present.

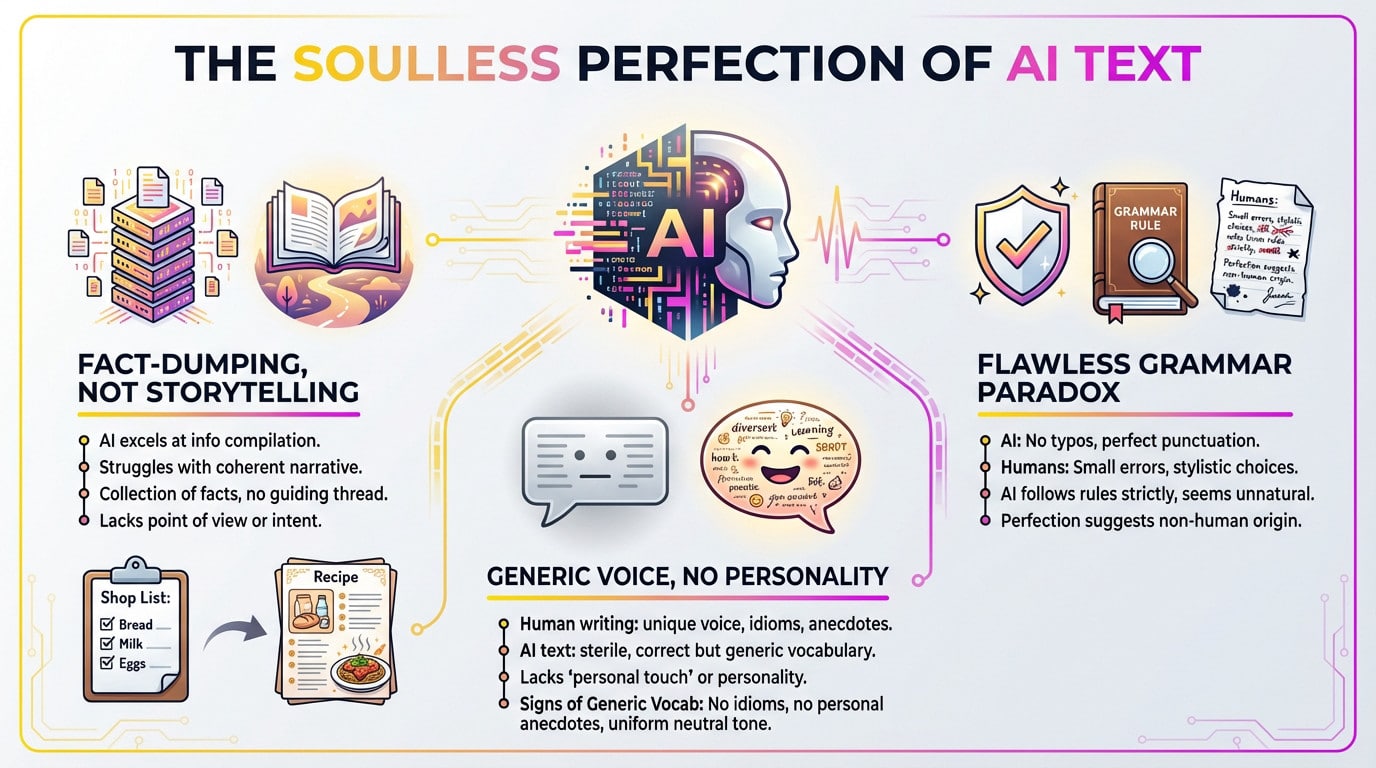

Fact-Dumping Without a Story

Machines are great at scraping data and piling it up. They can list facts faster than any of us ever could. But ask them to weave a story? They fall flat. There is no beginning, middle, or end, just a cold data pile.

You get a stack of relevant bullet points, sure. But there is no golden thread tying them together. The text lacks a human intention guiding you through the mess.

It is the difference between a grocery list and a grandma’s secret recipe.

Generic Vocabulary and Lack of Voice

Real writing is messy and full of weird quirks. We use idioms that don’t make sense or crack bad jokes. Those little flaws are what make a voice unique.

AI text feels sterilized, like a hospital hallway. The vocabulary is technically correct but totally flavorless. It has zero personality. It’s the gap between a basic chatbot and a truly conversational AI that actually gets you.

- Absence of idioms or regional flair.

- Lack of personal anecdotes or lived examples.

- Uniform and neutral tone from start to finish.

The Strange Case of Flawless Grammar

Here is a weird paradox: if it looks too perfect, be suspicious. A piece with zero typos and textbook syntax sets off alarm bells. It feels uncanny because almost nobody actually writes like that.

Even experts slip up or break grammar rules for effect. We use punctuation to create rhythm, not just to follow a manual. Algorithms follow the rulebook blindly. That rigid adherence feels unnatural.

A perfection this obvious often betrays the lack of a human hand.

The Tools of the Trade: Using AI Detectors Wisely

Your intuition is a solid starting point, but sometimes you need a second opinion. That is where detection software enters the picture, provided you know how to wield it properly.

How These Detectors Actually Work

Let’s get one thing straight: these programs don’t actually “read” or understand context. Instead, they scan for statistical patterns in word choice and sentence structure. It is purely a numbers game.

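Two concepts drive this analysis: perplexity and burstiness. Perplexity measures how surprising the text is to a language model (the lower the score, the more predictable the text), while burstiness measures how much that predictability and the sentence structure vary from one sentence to the next. Human writing usually scores higher on both metrics, unlike the flat rhythm of algorithms.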
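Here is what those two numbers look like as arithmetic. Perplexity is the exponential of the average negative log-probability a model assigns to each word, so text the model finds predictable scores low. The Python sketch below swaps in a deliberately tiny stand-in for a real language model, a unigram word-frequency model with add-one smoothing trained on a reference text, and treats burstiness as the spread (standard deviation) of per-sentence perplexity. Commercial detectors use large neural models and do not publish their exact formulas, so the names and definitions here are assumptions.

```python
import math
import statistics
from collections import Counter
from typing import Callable


def train_unigram(reference: str) -> Callable[[str], float]:
    """Toy stand-in for a language model: word probabilities with add-one smoothing.

    One extra vocabulary slot gives unseen words a small non-zero probability.
    """
    counts = Counter(reference.lower().split())
    total = sum(counts.values())
    vocab = len(counts) + 1  # +1 slot for "unknown word"
    return lambda word: (counts[word] + 1) / (total + vocab)


def perplexity(text: str, prob: Callable[[str], float]) -> float:
    """exp(average negative log-probability per word); lower means more predictable."""
    words = text.lower().split()
    avg_nll = -sum(math.log(prob(w)) for w in words) / len(words)
    return math.exp(avg_nll)


def burstiness(sentences: list[str], prob: Callable[[str], float]) -> float:
    """Spread (population standard deviation) of per-sentence perplexity."""
    return statistics.pstdev([perplexity(s, prob) for s in sentences])


reference = "the cat sat on the mat the dog sat on the mat"
prob = train_unigram(reference)
common = "the cat sat on the mat"
unusual = "quantum marmalade sings"
print(perplexity(common, prob) < perplexity(unusual, prob))  # True
print(burstiness([common, unusual], prob))
```

Two identical sentences give a burstiness of 0.0, while mixing a familiar sentence with a surprising one pushes it up. A real detector applies the same arithmetic with a far stronger probability model.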

Basically, they hunt for the same monotony we spot manually when we try to detect AI writing, only at computational speed.

A Quick Look at the Main Players

The market is currently flooded with scanners like Nation AI, Copyleaks, and QuillBot. Choosing the right one can feel overwhelming.

| Tool | Key Technology | Stated Accuracy |
|---|---|---|
| Nation AI | Perplexity, Burstiness & AI Logic | >98% (Publisher data) |
| Copyleaks | Perplexity, Burstiness & AI Logic | >97% (Publisher data) |
| QuillBot | Perplexity & Burstiness | High (Balanced approach) |

These platforms claim high success rates based on internal testing. However, independent studies from places like Penn State suggest results vary. You need to look at the data yourself.

The Giant Asterisk: Why They Are Not Foolproof

Here is the brutal truth: no software offers 100% certainty. These engines provide a probability score, never a definitive verdict. You must treat the output as a hint, not a fact.

Relying solely on an AI detector’s score for a major decision is irresponsible. These tools are a data point, not a judge and jury.

Several blind spots remain problematic for even the best detectors.

- Risk of false positives (wrongly accusing a human).

- Difficulty detecting mixed text (human writing edited by AI, or AI output edited by a human).

- AI models evolve faster than detectors can keep up.

Thinking Like a Detective: Context Is Your Best Clue

Who Is the Author? Check Their Footprint

Your first move should be a direct comparison with the author’s previous work. Does this text actually resemble it? Check whether the tone and vocabulary level line up. Consistency is your best friend here.
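If you have samples of the author’s earlier writing, a simple stylometric check can back up your gut feeling. The Python sketch below compares how often two texts use a handful of common function words ("the", "and", "but", "i", and so on) and scores the match with cosine similarity, where 1.0 means identical usage. The word list, sample texts, and function names are hypothetical; real stylometry uses far longer texts and many more features, and a low score only signals a style shift, not machine authorship.

```python
import math
import re
from collections import Counter

# Small illustrative set of function words; stylometry studies use much larger lists.
FUNCTION_WORDS = ["the", "and", "but", "of", "to", "i", "we", "it", "that", "so", "just", "really"]


def profile(text: str) -> list[float]:
    """Relative frequency of each function word in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    total = len(words) or 1  # avoid dividing by zero on empty text
    return [counts[w] / total for w in FUNCTION_WORDS]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 = identical usage pattern, 0.0 = nothing in common."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical samples: casual past work versus a formal new submission.
past_work = "i really think we just need to try it and see, but i could be wrong"
new_text = "it is essential to consider the implications of the data and the context"
print(round(cosine(profile(past_work), profile(new_text)), 2))
```

For these two short samples the score is about 0.25: the casual, first-person past work and the formal new text share almost none of the same habits.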

A sudden, radical style shift is a massive red flag. Authors do not swap their unique voices overnight, so a jarring change suggests the text may not be theirs.

You want human consistency. Do not hunt for robotic perfection.

The Smoking Gun: Version History and Sources

If the context allows, demand to see the version history. Real writing shows messy drafts, deletions, and heavy rewrites. We struggle with words. It is never a straight line.

A text that appears instantly, perfectly formed, is highly suspect. Likewise, if the cited sources are vague or broken, that is a major clue: fabricated citations are a classic AI error.

The Ultimate Test: Can They Talk the Talk?

The final test is actually quite simple. Go talk to the presumed author. Ask them direct questions about the text.

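Can they defend their arguments? Ask them to elaborate on a specific point. An inability to discuss their own work is basically a confession. Machines have struggled with this from the start: even ELIZA, one of the earliest chatbots, could mirror a conversation with scripted replies but could never explain or expand on anything.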

Here is exactly what you need to ask them. Watch their reaction closely.

- “Can you explain this passage in your own words?”

- “What was your main source for this section?”

- “Which argument was the hardest to formulate?”

Spotting the machine involves more than just checking for perfect grammar or running a software scan. It requires looking for that unique human spark—or the lack thereof. Use these tools as a guide, not gospel. Because at the end of the day, robots still can’t quite capture our beautiful, messy imperfections.