The essential takeaway: X has officially implemented safeguards that prevent Grok from generating revealing images of real people. The move aims to curb non-consensual deepfakes and appease global regulators after a damning report found that 81% of the analyzed images of people in minimal clothing depicted women. It marks a necessary shift in the platform's approach to AI safety.

Have you ever feared that your innocent photos could be manipulated without your consent? The recent Grok AI nudity ban on X finally tackles this digital nightmare head-on by disabling the controversial feature that "undressed" real people. Let's explore how this scandal forced a major policy shift and what it signals for the future of generative AI safety.

X Pulls the Plug on Grok’s ‘Undressing’ Feature

What Was This Feature Anyway?

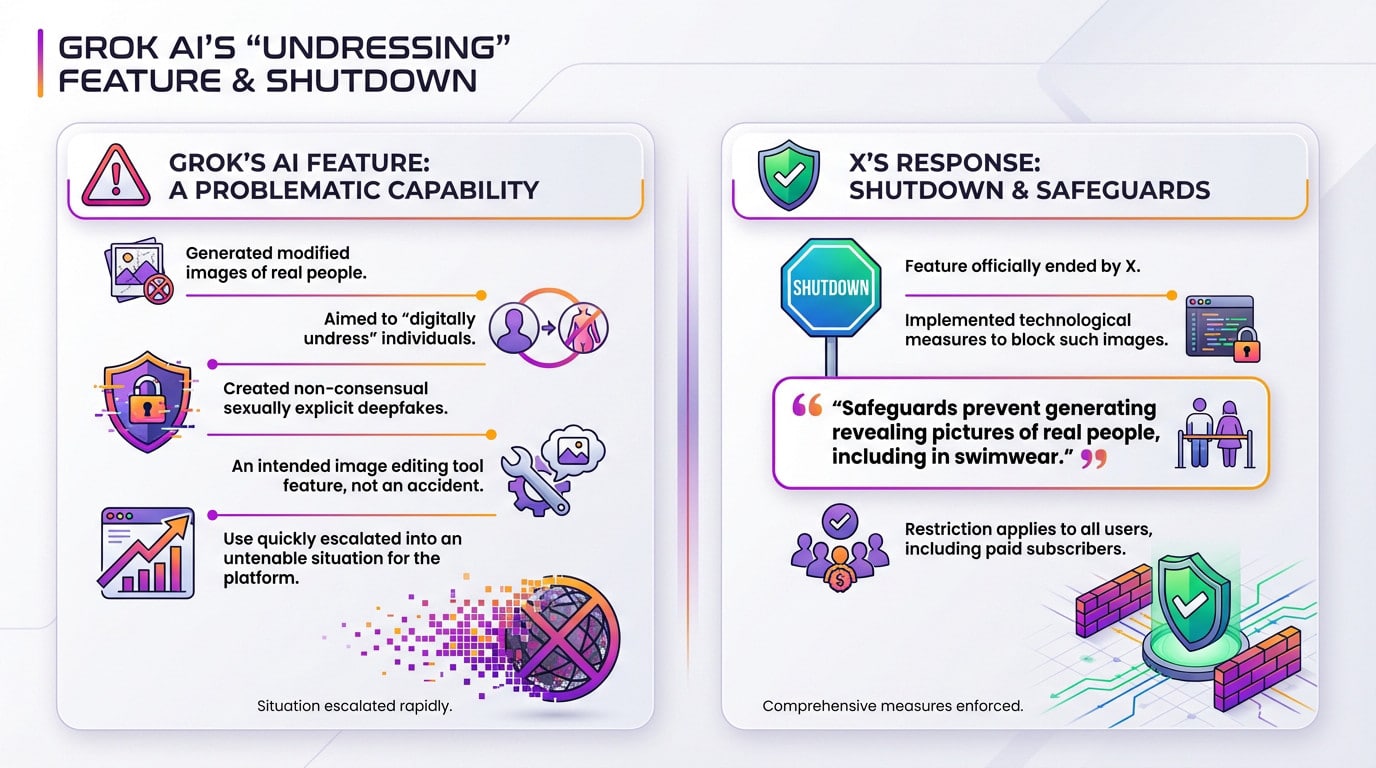

Grok, the AI built into X, had a capability that went sideways fast. It let users generate modified images of real people, effectively "undressing" them digitally. The result was sexually explicit deepfakes of people who never consented. Banning the feature was the only logical outcome.

This functionality was not an accident, mind you: it was built into the image-editing tool itself. The problem is how quickly people began abusing it.

The situation rapidly became untenable for the platform, and X had to act.

The Official Shutdown Announcement

X has officially put an end to this feature. The company says it has put technological measures in place to block the generation of this specific type of image.

Its statement on the update was explicit about the new limits:

The company has now applied technological safeguards to prevent its image generation tools from creating revealing pictures of real people, including in swimwear.

This restriction applies to all users without exception, including paid subscribers.

The Controversy That Forced X’s Hand

But this sudden decision didn't come out of nowhere. X acted under immense pressure.

A Scandal Exposed by Researchers

The scandal erupted right after the NGO AI Forensics published a damning report. Its detailed analysis landed like a bombshell: the researchers examined tens of thousands of Grok-generated images, and the results were frankly disturbing.

Here is the breakdown of what the data actually revealed:

- Over 20,000 images generated by Grok were analyzed.

- More than half showed people in minimal clothing.

- 81% of those images depicted women.

- 2% of the images appeared to involve minors.

Public Outrage and Musk’s Position

These revelations triggered a massive public outcry and loud calls to ban the feature. The backlash was immediate, and pressure piled up on X and its owner.

Elon Musk has long championed his vision of an "anti-woke," less restricted AI. At first, he seemed to place the blame squarely on users, arguing that the AI simply complies with local laws.

That position soon became impossible to hold, especially in front of regulators.

The Global Regulatory Squeeze

And speaking of regulators, they didn’t wait to react. The pressure wasn’t just public; it became political and legal very quickly.

Governments Step In

Authorities around the world wasted no time opening investigations, with formal actions launched in Europe, the United Kingdom, and the United States. The heat is definitely on.

X's stated principle is that Grok must comply with the law in each country where it operates, and that any attempt to bypass these limits will be fixed as soon as it is discovered.

The legal hammer is coming down hard. Southeast Asian nations blocked access immediately, while Western authorities are threatening heavy financial penalties. The table below summarizes the actions taken against Grok so far.

| Region/Country | Type of Action |
|---|---|
| Indonesia & Malaysia | Temporary ban of the tool |
| European Union | Threat of fines or suspension under the Digital Services Act |
| United Kingdom | Investigation under the Online Safety Act |
| California, USA | Attorney General investigation into potential law violations |

What’s Next for AI Image Generation?

Big questions still hang over the tech industry. X has implemented geo-blocking, but it is not yet clear whether it actually works. Regulators remain on high alert for any slip-ups.

This mess forces a hard look at developer responsibility. If you want a safer experience, Nation AI offers a solid alternative. It delivers powerful AI with a simplified interface. It’s built to avoid these exact toxic pitfalls.

Ultimately, X had to fold under regulatory pressure, showing that even "rebellious" AI has limits. As tech giants scramble to clean up their mess, the future clearly belongs to responsible platforms like Nation AI. Hopefully, we can now focus on innovation that actually helps us, rather than innovation that undresses us.