The key thing to remember: the term “Dark GPT” covers AIs devoid of ethical filters, ranging from marketing scams to genuine cybercriminal weapons such as FraudGPT. By facilitating the creation of malware and targeted phishing, these tools democratize cyberattacks. A clear understanding of these risks remains the best way to strengthen digital security in the face of these automated threats.

Does the rumor of a Dark GPT capable of hacking into your data in seconds make you break out in a cold sweat? We’re going to unravel the truth about these malicious artificial intelligences, distinguishing between simple marketing scams and the real tools used by cybercriminals. Stay with us to find out how these models really operate, and discover the essential countermeasures to avoid becoming the next target of these unbridled algorithms.

Demystifying the term Dark GPT: what are we really talking about?

More than a single definition

“Dark GPT” is not a single product but a catch-all term that is often misunderstood. Broadly, it refers to AI that operates without the usual ethical safeguards, and the breadth of the label creates enormous confusion.

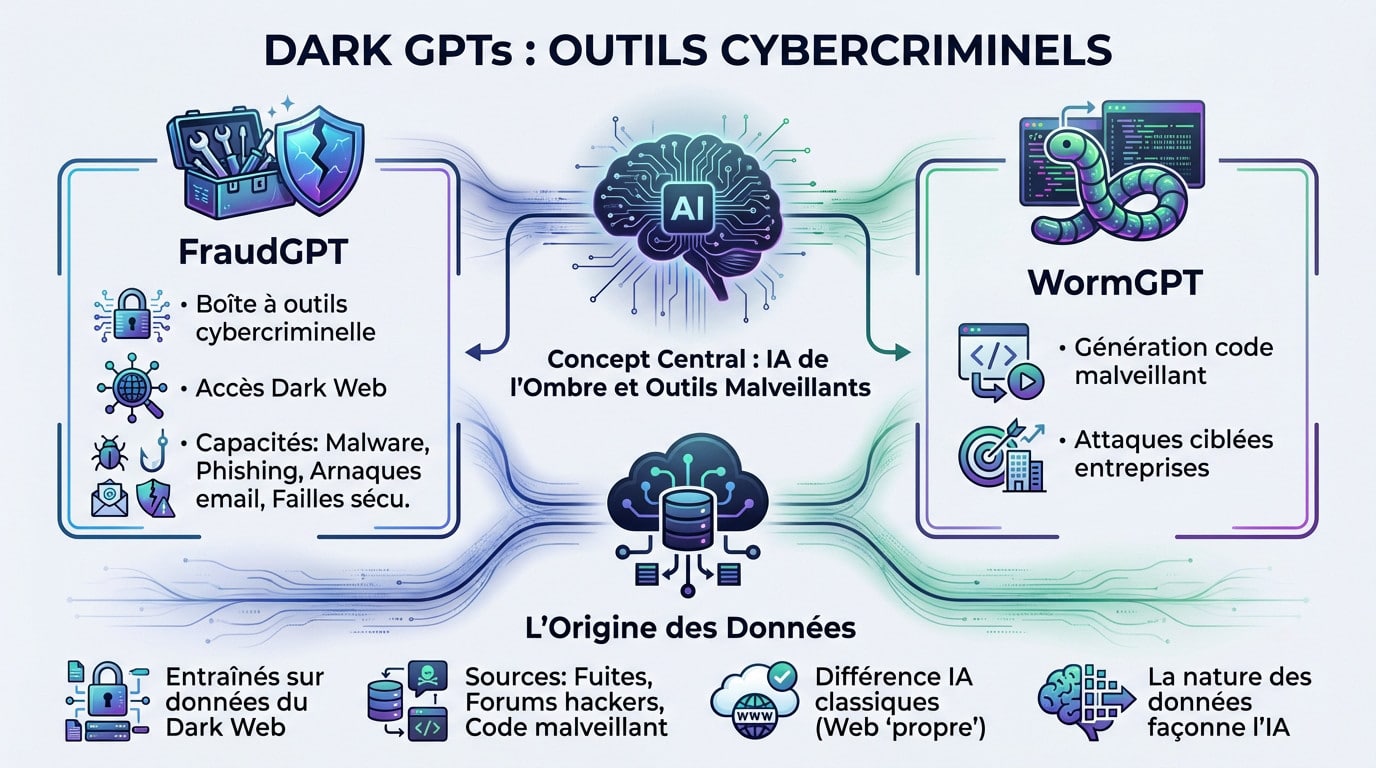

There are three main families behind this name. First, genuine cybercrime tools such as WormGPT and FraudGPT, sold on the dark web. Second, “unbridled” versions of consumer AI, obtained by jailbreaking mainstream chatbots. Finally, aggressively marketed commercial products sold on the open web as “uncensored” AI.

It’s essential to differentiate between them to understand the real issues and dangers.

The difference from consumer AI

Compare this with ChatGPT or Google Gemini. The fundamental distinction lies in the total absence of content filters and ethical restrictions.

Companies like OpenAI invest heavily in restricting their AIs to prevent misuse. Dark GPTs, by contrast, are designed precisely to ignore these barriers; getting a mainstream AI to bypass them is known as “jailbreaking”.

This lack of limits is what makes them both attractive to some and extremely dangerous.

The real Dark GPT: the tools of cybercriminals

You think artificial intelligence is limited to writing poems or summarizing meetings? Think again. A far more insidious threat is growing in the shadow of encrypted networks.

The danger is real and immediate. These tools enable any novice to launch terrifyingly sophisticated cyberattacks once reserved for seasoned experts. They exploit stolen data to maximize damage, turning cybercrime into an automated, subscription-based industry. The ethical implications are dizzying: we’re facing a technology capable of amplifying chaos on an industrial scale. If you underestimate this shift, your defenses risk becoming obsolete before you’ve even detected an intrusion.

Now that the distinction is clearer, let’s look at the most worrying category: AIs designed specifically to cause harm.

FraudGPT and WormGPT, the spearheads

FraudGPT has established itself as the best-known example of this underground movement. Sold on dark web marketplaces, it presents itself as a comprehensive toolkit for any self-respecting cybercriminal.

Its advertised capabilities are nothing short of frightening: writing complex malware, generating phishing pages billed as undetectable, crafting strikingly realistic scam emails and helping to find security holes in target networks. This is the true face of malicious AI.

Its counterpart, WormGPT, focuses on generating malicious code for targeted attacks.

The origin of the data: the dark web as a training ground

The power of these models lies in the very source of their training. Their effectiveness reportedly comes from being trained on data from the dark web: massive leaks, hacker forum discussions and vast malicious code bases.

This is a far cry from classic AIs trained on literature or the “clean”, regulated web. Here, the toxic nature of the training data directly shapes the offensive, harmful purpose of the final model.

This is what radically differentiates them from an ordinary customer-service chatbot.

“Fake” Dark GPT: between marketing and circumvention

But not all Dark GPTs are weapons of cyberwarfare. Others are far more harmless, or simply marketing scams.

The marketing promises of “limitless” versions

Various products are advertised on the open web under the “Dark GPT” name. It’s a marketing ploy to sell a supposedly “uncensored” AI to users frustrated with the restrictions of conventional models.

The reality behind the façade is often quite different. These services have a disastrous reputation: low Trustpilot ratings and reviews point to recurring billing problems and unauthorized direct debits. Technical quality is often mediocre.

Be wary of these promises and keep your money.

Jailbreak: forcing AI to disobey

In simple terms, a “jailbreak” uses cleverly crafted prompts to get a standard AI such as ChatGPT to bypass its own safety rules.

It’s a cat-and-mouse game: developers constantly patch these loopholes, and users keep looking for new ones.

To navigate safely, it’s essential to distinguish genuine criminal tools from simple circumvention tricks and commercial traps. The table below compares the purpose, access and risk of each type.

| Type of Dark GPT | Main purpose | Access | Associated risk |
|---|---|---|---|
| Malicious Dark GPT | Cybercrime (fraud, malware) | Dark web, specialized forums | High (illegal, dangerous) |
| Commercial Dark GPT | Marketing (selling “uncensored” AI) | Public websites, often pay-per-use | Medium (scams, poor service, billing problems) |
| Jailbreak/persona Dark GPT | Bypassing legitimate AI filters | Specific prompts on platforms such as ChatGPT | Low to medium (violation of terms of use, generation of inappropriate content) |

Concrete risks and how to protect against them

We often imagine hacking as the work of hooded geniuses typing green lines of code in a dark cellar. Wrong. With the emergence of models like FraudGPT and WormGPT, cybercrime has been “uberized”: anyone willing to pay for a subscription can now generate frighteningly sophisticated attacks with little or no coding knowledge. And therein lies the rub: the technical barrier has been broken. This is not science fiction but an immediate reality in which your digital identity is constantly in the crosshairs. These unfiltered AIs, reportedly fed with data from the dark web, have no ethical or moral constraints. And if you think you’re safe because you’re not a multinational corporation, think again: we are all targets.

Whether it’s a criminal tool or a simple trick, the Dark GPT phenomenon raises real security issues. Let’s take a look at the concrete threats and, above all, how to avoid becoming a victim.

Threats not to be underestimated

These dangers are not far-off scenarios: they are already real and in action.

- Phishing and AI fraud: Creation of ultra-personalized, credible scam emails and messages.

- Disinformation and deepfakes: Massive generation of fake news, images or videos to harm or manipulate opinion.

- Malware development: Assistance in creating viruses and other malicious code, even for inexperienced hackers.

The core problem is ease of access. These AIs drastically lower the skill level required to launch sophisticated cyberattacks, and that is what has really changed.

A few basic safety precautions

We can’t prevent these tools from existing. We can, however, adopt better digital hygiene to become much harder targets.

- Always check the source: Be skeptical of unexpected emails, messages or offers, even if they seem well-written.

- Use robust security: Complex, unique passwords and two-factor authentication (2FA) are your best allies.

- Limit your digital footprint: The less information you share publicly, the less material malicious AI agents have to target you with.
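For readers curious about what a “complex, unique” password looks like in practice, here is a minimal Python sketch that generates one with the standard `secrets` module, which draws from a cryptographically secure random source. The function name `generate_password`, the character set and the 12-character minimum are illustrative assumptions, not requirements from any standard.

```python
import secrets
import string

# Illustrative character pool: letters, digits and punctuation (an assumption, not a standard).
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password built with a cryptographically secure generator.

    The 12-character minimum below is a hypothetical policy chosen for this sketch.
    """
    if length < 12:
        raise ValueError("use at least 12 characters")
    # secrets.choice picks each character independently from the pool.
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```

Because every character is drawn independently, each call produces a different password, which makes reusing one across sites unnecessary. In everyday use, a reputable password manager does the same job and also remembers the result for you.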

Never let your guard down online. Vigilance and common sense are your best defense.

In short, the term Dark GPT refers to both formidable hacking tools and simple marketing decoys. AI is merely an amplifier of intentions, good or bad. In the face of this new situation, don’t panic: your